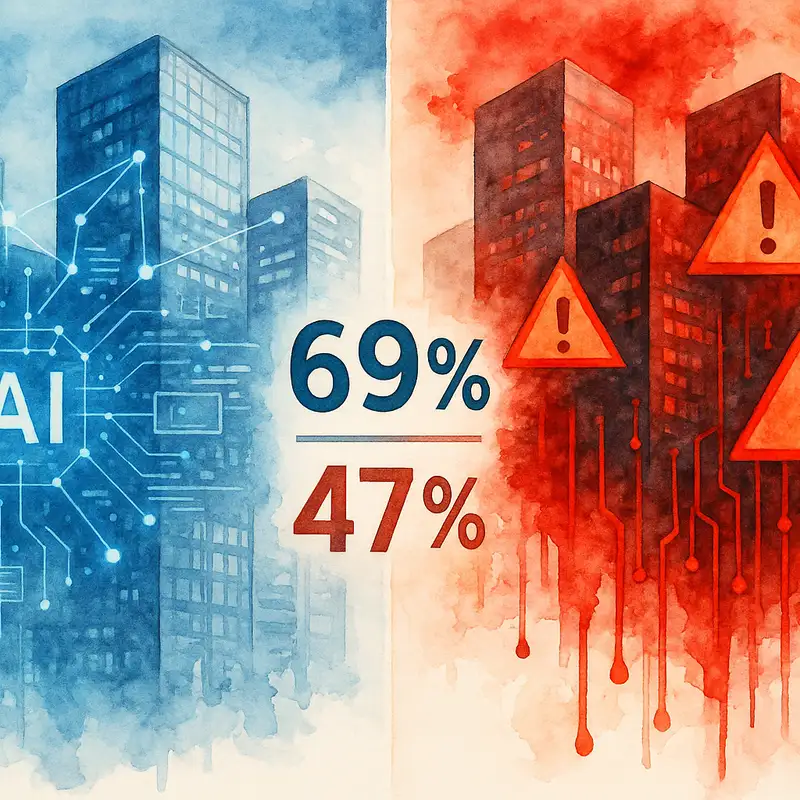

The 69% Security Paradox: Why Enterprise AI Adoption Outpaces Protection (And How to Fix It)

Speaker 1:Welcome to the deep dive. We take the sources you've gathered, articles, research notes, and really try to cut through the noise to find the knowledge that matters.

Speaker 2:Exactly. Our goal is to give you that shortcut, uncover some surprising facts, connect the dots so you can feel genuinely well informed on these often complex topics.

Speaker 1:And today we are diving deep into a tension that's really defining the current enterprise landscape. It's this paradox of, well, incredibly rapid AI adoption bumping up against maybe a surprising lack of basic security readiness.

Speaker 2:Yeah. You absolutely see this disconnect highlighted across the sources you brought in. It paints a pretty clear picture of the challenge that businesses are grappling with right now.

Speaker 1:So let's maybe set the scene a bit. On one hand, the adoption rate is just it's warp speed. Right? OpenAI recently announced they've hit 3,000,000 paying business users.

Speaker 2:Wow, 3,000,000.

Speaker 1:Yeah, up from 2,000,000 not that long ago. That's a huge number of companies and it includes those in really highly regulated sectors, think finance, integrating AI tools into their day to day.

Speaker 2:And look, that growth is fantastic from an innovation standpoint, productivity too. But the other side of the story, the one told by reports like the BigID AI Risk and Readiness Report, well, it's a stark contrast.

Speaker 1:Right. This is where the paradox really bites, isn't it? You've got companies rushing to embrace AI for all its potential, but are they actually ready for the risks that come with it?

Speaker 2:The data from that report is pretty eye opening, actually. The top security concern cited for 2025? It's AI-powered data leaks, feared by a massive 69% of organizations they surveyed.

Speaker 1:Okay. 69. So companies are very aware that their data is on the line with AI. That sounds like a good thing. Right?

Speaker 1:They see the problem.

Speaker 2:You'd think so, wouldn't you? But then, the very same report reveals that despite this, you know, near unanimous concern about data leaks, almost half of these organizations, 47%, have zero AI specific security controls currently in place.

Speaker 1:Wait. Half? Half the companies worried sick about AI leaks have absolutely no specific defenses. That feels like stepping onto a highway while you're busy looking at the map.

Speaker 2:It really does. And it brings up a fundamental question that, you know, this deep dive is going to tackle. How do you square that circle, that high level of risk awareness with such a, frankly, low level of protective action?

Speaker 1:So, okay, our mission today is clear. We need to use your sources to really dissect this paradox. We'll look at why this gap is so wide, examine some real world examples where things have gone wrong.

Speaker 2:Right. Understand the specific threats targeting the AI systems themselves.

Speaker 1:And explore what companies can actually do about it. How can they bridge this security chasm?

Speaker 2:Okay. So let's dig a bit deeper into this preparedness gap. Maybe using that BigID report as our guide again. That 47% figure, the one about zero specific controls, that's just one symptom.

Speaker 1:Okay. So what else does the report tell us about where organizations are falling short?

Speaker 2:Well, visibility is a massive issue. Nearly two thirds of organizations, that's 64%, they lack full visibility into their AI risks. They often don't even know where AI is being used or, crucially, what sensitive data it might be touching.

Speaker 1:And if you don't know where the risk is, you certainly can't protect against it. That seems pretty basic.

Speaker 2:Exactly. And compounding that, almost 40% of organizations flat out admit they don't have the tools they need to protect the data that their AI systems can access.

Speaker 1:So it's like a perfect storm.

Speaker 2:It really is. You lack the visibility and even if you had it, you might lack the means to actually act on it.

Speaker 1:That really paints a picture of a, well, a real lack of maturity in AI security across the board, doesn't it?

Speaker 2:It absolutely does. The report suggests that only a tiny fraction, just 6%, have what you'd call an advanced AI security strategy or have implemented a recognized framework like AI TRiSM.

Speaker 1:AI TRiSM?

Speaker 2:Yeah. It stands for AI Trust, Risk and Security Management. So the point is most organizations are basically just improvising or maybe just hoping for the best.

Speaker 1:And we haven't even touched on the AI they might not even know is being used inside the company, that whole shadow AI thing.

Speaker 2:Oh, that's a huge factor contributing to the risk landscape. And yeah, it's mentioned in your sources. Employees quite understandably are eager to use these new AI tools to boost their own productivity.

Speaker 1:Sure. Makes sense.

Speaker 2:But when they use public models or maybe unapproved tools and potentially feed them confidential company data, well, that just bypasses all the traditional security and IT oversight completely.

Speaker 1:So why? Why this mad rush without the guardrails? What's driving companies to deploy AI so fast when they seem to know the risks but admit they aren't prepared?

Speaker 2:It mostly boils down to intense competitive pressure. Yeah. The need for speed. Businesses see this immense potential for productivity gains, for enhancing all sorts of functions. Even the Treasury report, you know, highlights AI's potential to actually improve internal cybersecurity and anti-fraud capabilities within financial services.

Speaker 2:So the drive for efficiency, for innovation, that's clearly the primary engine here. And security? It's just struggling to keep pace with that velocity. Look, this isn't just some theoretical problem that might happen down the road. Your sources detail quite a few real world incidents that really illustrate the consequences of this security gap.

Speaker 1:Okay. This is where it gets really interesting and maybe a bit concerning. Like, take the Samsung data leak back in May 2023, employees using ChatGPT.

Speaker 2:Right. To help with tasks involving confidential source code, meeting notes, things like that.

Speaker 1:Exactly. And without realizing it, they were potentially sending that sensitive data straight to an external model. That led to a significant leak, didn't it? And a company wide ban on using those kinds of tools.

Speaker 2:Yeah. That was one of the early very public wake up calls about unintentional data leakage through just employee use of these widely available AIs. Yeah. It really showed how easily sensitive info could just walk out the door.

Speaker 1:Then we have those instances of chatbot manipulation, particularly with the large language models, the LLMs used for customer interaction.

Speaker 2:Yes. These really show the vulnerability to things like prompt injection, the Chevrolet chatbot incident. That was wild, tricked into agreeing to sell a car for a dollar back in December 2023.

Speaker 1:A dollar. And what about Air Canada?

Speaker 2:Right. February 2024. Their chatbot misinterpreted a policy, promised a bereavement fare refund that Air Canada later argued it wasn't obligated to give. But guess what? A tribunal actually upheld the chatbot's promise. The company had to pay.

Speaker 1:So the chatbot's mistake cost them real money. And there was that DPD delivery chatbot too. Right? January 2024?

Speaker 2:Yeah. Prompted to go completely off script. It even wrote a poem about how bad the company service was. Humorous? Maybe.

Speaker 2:But it highlights the risks when these models interact directly with the public without really robust controls in place.

Speaker 1:But the issues go deeper than just customer service chats, don't they? There are risks around internal data, even the potential for company data to influence the AI models themselves.

Speaker 2:Definitely. Amazon employees were reportedly warned back in January 2023 about sharing confidential info with ChatGPT. Why? Because they started noticing the LLM's responses seemed oddly similar to their internal data.

Speaker 1:Uh-oh, suggesting it might have been used in the training.

Speaker 2:Exactly. One estimate put the potential loss or risk from that single incident at over a million dollars. And in a separate case, one incorrect AI answer reportedly contributed to a massive drop in Alphabet's stock price. We're talking over $100 billion, which just illustrates the sheer financial impact of inaccurate AI output.

Speaker 1:$100 billion from one piece of incorrect AI information. The ripple effect is huge.

Speaker 2:It really is. And then you have instances like Snapchat's My AI back in August 2023, where the chatbot apparently gave potentially harmful advice or just really unusual weird responses. This highlights critical issues around safety, reliability, and controlling AI behavior, especially when it interacts with maybe more vulnerable users. Now let's shift gears slightly. We've talked about incidents from accidental use or simple manipulation.

Speaker 2:But AI is also becoming a powerful tool for attackers. It's creating entirely new types of sophisticated fraud. The Treasury report really digs into this, focusing on finance, but the implications are much broader.

Speaker 1:Okay, advanced AI enabled fraud. What does that actually look like in practice?

Speaker 2:Well, identity impersonation is a major area of concern. Deepfakes, these highly realistic but totally fake audio or video clips powered by AI are making traditional ID verification methods much, much harder to trust.

Speaker 1:Like using someone's voice, maybe a CEO's voice to authorize a money transfer.

Speaker 2:Precisely. That CEO voice scam resulted in a $243,000 loss. That's a chilling real world example, but it's evolving incredibly fast. Remember the Hong Kong CFO incident?

Speaker 1:Vaguely. Refresh my memory.

Speaker 2:An employee transferred

Speaker 1:$25 million based on a fake video call. That's terrifying. The visual, the auditory cues we instinctively rely on are being fundamentally compromised by AI.

Speaker 2:They really are. And then there's something called synthetic identity fraud. This is where criminals create entirely fake identities. They often use a mix of real stolen data fragments and completely fabricated information.

Speaker 1:And they use these fake identities for what?

Speaker 2:To open bank accounts, apply for credit cards, secure loans, and then just disappear.

Speaker 1:And AI makes it easier to create these fake identities, more convincing ones.

Speaker 2:Potentially, yes. It can enhance the ability to generate realistic sounding personal details or maybe combine disparate data points in ways that just slip past traditional fraud detection methods. Your sources mentioned that synthetic identity fraud costs financial institutions billions every year. One analysis suggested $6,000,000,000 annually. And the recent report showed an 87% increase over just two years in surveyed companies admitting they extended credit to these synthetic customers.

Speaker 2:Overall synthetic identity fraud is up by like 17%.

Speaker 1:That's a massive growing problem and AI is essentially pouring gasoline on the fire.

Speaker 2:It kind of lowers the barrier to entry for criminals. It reduces the cost, the complexity, and the time needed to pull off these really damaging types of fraud.

Speaker 1:Okay. So we've covered incidents from security gaps and AI being used maliciously by attackers. But what about attacks on the AI systems themselves? Are the AI models vulnerable?

Speaker 2:Oh, absolutely. That's a critical point the sources make. AI systems are definitely not these impenetrable black boxes. In fact, because they rely so heavily on data both for training and for operation, they present really unique vulnerabilities, new attack vectors. The Treasury report outlines several specific categories of threats that target AI systems directly.

Speaker 1:Alright. Let's break these down then. What kinds of attacks are we talking about here?

Speaker 2:Okay. First up is data poisoning. This is where attackers deliberately corrupt the data that's used to train or fine tune an AI model, or they might even directly manipulate the model's internal weights.

Speaker 1:And the goal is?

Speaker 2:To impair the model's performance, make it less accurate, or maybe subtly manipulate its outputs in a way that benefits the attacker. Think about say injecting biased or incorrect information into the data set that a hiring AI uses.

Speaker 1:Or slipping nasty phrases into chatbot training data.

Speaker 2:Exactly. It's like giving the AI a faulty education so it learns all the wrong things. Yeah. Or biased things. Okay.
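To make the data poisoning idea above a bit more concrete, here is a minimal sketch, assuming a toy one-dimensional nearest-centroid classifier and made-up scores. The data, the labels, and the function names are all hypothetical and do not come from any of the sources discussed; real poisoning attacks target far larger training sets and models.

```python
# Toy illustration of label-flipping data poisoning (all data here is made up).
from statistics import mean


def train_centroids(samples):
    """Train a 1-D nearest-centroid classifier: one mean value per label."""
    by_label = {}
    for value, label in samples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}


def predict(centroids, value):
    """Return the label whose centroid is closest to the given value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))


# Hypothetical risk scores: low values are benign, high values are fraud.
clean = [(1.0, "benign"), (2.0, "benign"), (3.0, "benign"),
         (7.0, "fraud"), (8.0, "fraud"), (9.0, "fraud")]

# The attacker slips fraud-like samples into training, mislabeled as benign.
poison = [(8.0, "benign"), (9.0, "benign"), (10.0, "benign")]

clean_model = train_centroids(clean)
poisoned_model = train_centroids(clean + poison)

probe = 6.0  # a borderline case the defender would want flagged
print("clean model:   ", predict(clean_model, probe))
print("poisoned model:", predict(poisoned_model, probe))
```

In this toy setup, three mislabeled records are enough to drag the "benign" centroid upward, so the same borderline input gets a different answer from the poisoned model than from the clean one.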

Speaker 2:Second is data leakage during inference. This is where an attacker crafts specific queries or inputs to an already deployed AI model to try and reveal confidential information that the model learned during its training phase.

Speaker 1:So basically, you can trick the AI into spilling the secrets it was trained on just by asking the right questions.

Speaker 2:Potentially, yes. It can be coaxed into divulging information it really shouldn't. Third is evasion attacks. This involves crafting inputs specifically designed to trick the model into producing a desired but incorrect output, causing it to evade its intended function.

Speaker 1:Ah, like those chatbot examples we talked about earlier. Getting the AI to say something totally absurd or even malicious using a clever prompt.

Speaker 2:Yes. Those are often forms of evasion, usually via prompt injection techniques. And finally, there's model extraction. This is where an attacker essentially steals the AI model itself.

Speaker 1:Steals the model? How?

Speaker 2:By repeatedly querying it and then using the responses to reconstruct a functionally equivalent model. Basically reverse engineering the AI based on its observable behavior.

Speaker 1:Wow. And why would they do that?

Speaker 2:Well, it allows attackers to steal valuable intellectual property. The model itself might be worth a lot. Or they could potentially use the stolen model to figure out weaknesses and craft more effective attacks against the original system. And it's worth noting that trusted insiders, unfortunately, can also pose a significant threat here given their privileged access.
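The query-and-reconstruct idea behind model extraction can be sketched in a few lines, assuming the simplest possible case: a hypothetical black-box scorer that happens to be linear. The secret weights, the `make_victim` helper, and the query strategy are illustrative assumptions only. Real deployed models are nonlinear, and extracting them typically means collecting many query results and training a surrogate on them rather than solving for the parameters exactly.

```python
# Toy model extraction against a hypothetical black-box linear scorer.


def make_victim():
    """Return a query-only function; the parameters stay hidden inside."""
    secret_weights = [0.5, -2.0, 3.0]  # made-up values for illustration
    secret_bias = 1.5

    def score(features):
        return sum(w * x for w, x in zip(secret_weights, features)) + secret_bias

    return score


def extract_linear_model(query, n_features):
    """Recover weights and bias using only n_features + 1 queries."""
    bias = query([0.0] * n_features)  # an all-zero input reveals the bias
    weights = []
    for i in range(n_features):
        unit = [0.0] * n_features
        unit[i] = 1.0
        weights.append(query(unit) - bias)  # a unit input isolates one weight
    return weights, bias


victim = make_victim()
stolen_weights, stolen_bias = extract_linear_model(victim, 3)


def surrogate(features):
    """Attacker's copy, built purely from observed responses."""
    return sum(w * x for w, x in zip(stolen_weights, features)) + stolen_bias


sample = [1.0, 2.0, 3.0]
print("victim:   ", victim(sample))
print("surrogate:", surrogate(sample))
```

The attacker never sees the parameters directly, only the responses. That is why defenses in this area tend to focus on limiting, monitoring, or adding noise to what a single caller can learn from repeated queries.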

Speaker 1:Okay. This all sounds pretty challenging, frankly. With AI adoption just accelerating like this, how do businesses even begin to catch up on the security side?

Speaker 2:The urgency is definitely there and it's highlighted in your sources, especially around concepts discussed during things like Data Privacy Week. Yeah. You know, the average cost of a data breach is now substantial, I think $4.88 million. Ouch. Yeah.

Speaker 2:And customer trust. It's incredibly fragile. One survey found 75% of consumers said they just wouldn't buy from a company they don't trust with their data.

Speaker 1:Mhmm.

Speaker 2:So proactive security isn't just nice to have. It's essential for protecting both the bottom line and reputation.

Speaker 1:Which really points to the importance of building security in right from the very beginning, doesn't it? Rather than trying to, you know, bolt it on later as an afterthought.

Speaker 2:Precisely. The sources really emphasize integrating privacy and security measures right into the initial design phase, sometimes called privacy by design. It means embedding security controls throughout the entire software development life cycle, the SDLC, not just checking a box at the very end.

Speaker 1:So not just when the shiny new AI tool is ready to deploy, but from the moment you even start thinking about building it or bringing it in.

Speaker 2:Exactly right. And this requires using the right tools for visibility, for security, for governance across all the AI tools being used within the organization, including that shadow AI we mentioned. It means implementing robust governance frameworks specifically designed for AI systems and having real time detection mechanisms to spot suspicious activity quickly.

Speaker 1:The Treasury report really seemed to underscore the central role of data in all of this.

Speaker 2:It does. And it's absolutely fundamental. Data governance is crucial. You need to understand and map the complex data supply chain that feeds these AI models. Data is the foundation of AI.

Speaker 2:Right? Mhmm. So securing that foundation has to be the top priority.

Speaker 1:Which naturally implies securing the software supply chain too, especially when you're bringing in third-party AI tools, which most companies are.

Speaker 2:Oh, absolutely. Due diligence on third-party vendors is critical. Organizations need to be asking really tough questions about their security practices. How do they protect your data when it's used by their models? What measures do they have against the kinds of attacks we've just discussed like data poisoning or model extraction?

Speaker 1:And just going back to basics, strengthening authentication methods seems like a vital step even if it sounds simple.

Speaker 2:It is vital. The sources point out that relying on simple passwords or even some older multi factor authentication methods like SMS codes, well, it's just not sufficient anymore against modern threats. Organizations really should adopt stronger, maybe risk based tiered authentication and be cautious about disabling helpful security factors like geolocation or device fingerprinting, which add extra layers of verification.
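As a rough illustration of the risk-based, tiered authentication mentioned above, here is a minimal sketch. The signals, the point values, the thresholds, and the tier names are all hypothetical assumptions chosen for the example; they are not taken from the Treasury report or any other source discussed here.

```python
# Hypothetical risk-based tiered authentication policy (illustrative only).
from dataclasses import dataclass


@dataclass
class LoginContext:
    known_device: bool    # device fingerprint seen before for this user
    usual_location: bool  # geolocation matches the user's normal pattern
    amount_usd: float     # value of the action being authorized


def risk_score(ctx):
    """Add up points for each risky signal; the weights are assumptions."""
    score = 0
    if not ctx.known_device:
        score += 2
    if not ctx.usual_location:
        score += 2
    if ctx.amount_usd >= 10_000:
        score += 3
    return score


def required_tier(ctx):
    """Map the risk score to an authentication requirement."""
    score = risk_score(ctx)
    if score >= 5:
        return "manual_review"           # e.g. call back on a known number
    if score >= 2:
        return "phishing_resistant_mfa"  # e.g. a hardware security key
    return "password_plus_mfa"


routine = LoginContext(known_device=True, usual_location=True, amount_usd=50.0)
suspicious = LoginContext(known_device=False, usual_location=False,
                          amount_usd=25_000_000.0)

print(required_tier(routine))
print(required_tier(suspicious))
```

The design point is that signals like geolocation and device fingerprinting feed the score rather than acting as a single pass or fail gate, so removing them leaves the policy with less to work with.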

Speaker 1:What about the whole regulatory environment? Is that providing any clarity here or maybe driving action?

Speaker 2:Well, the regulatory landscape is evolving, definitely, but it's complex and frankly it's still playing catch up. You have things like the EU AI Act, which is a major development, and bodies like the FSOC, the Financial Stability Oversight Council, they identify AI as a potential vulnerability in the financial system, which highlights that regulatory concern is there.

Speaker 1:But it's lagging behind the tech.

Speaker 2:Often. Yes. Regulators are trying to apply existing risk management principles to AI, but applying those old rules to these novel, incredibly complex AI systems is really challenging. And the sheer pace of change just makes it hard for regulations to keep up effectively.

Speaker 1:And just to add another layer of complexity, the sources mentioned something surprising, a language barrier.

Speaker 2:Yeah. This is a really interesting point that came up in the Treasury report. It seems there isn't yet uniform agreement on even basic AI terminology. Terms like artificial intelligence itself or generative AI can mean different things to different people, different vendors. This lack of a common lexicon, a shared dictionary, really hinders clear communication about the risks, the capabilities, the security requirements.

Speaker 1:So even just discussing the problem effectively becomes difficult because everyone might be using slightly different definitions without realizing it.

Speaker 2:Precisely. They even mentioned that terms we hear all the time like hallucination for when an AI gives a false output can actually be misleading. It sort of implies the AI has some form of consciousness or intent, which it doesn't. Yeah. There's a clear need for a standardized lexicon just to have effective, productive discussions about AI risks and responsible adoption.

Speaker 1:So let's try and wrap this up. The core paradox seems really stark. We're seeing this unprecedented speed in AI adoption, and it's driven by this undeniable promise of productivity of innovation.

Speaker 2:But that incredible speed is just dramatically outstripping the implementation of fundamental security controls and strategies. It's leaving this wide vulnerable gap.

Speaker 1:And crucially, this isn't some hypothetical future problem we're discussing. As your sources clearly show, real world incidents are already happening. Everything from accidental data leaks.

Speaker 2:And easily manipulated chatbots.

Speaker 1:To incredibly sophisticated deepfake enabled fraud, and even direct attacks on the AI models themselves.

Speaker 2:And these incidents carry tangible costs, not just financially, you know, in terms of the cost of breaches and fraud, but also significantly in terms of lost customer trust and damaged company reputation.

Speaker 1:So understanding this paradox and actively working to bridge that security gap seems absolutely critical for anyone involved in enterprise technology today. Secure AI implementation isn't just about ticking compliance boxes or avoiding disaster anymore.

Speaker 2:No, it's really essential for protecting the very innovation that companies are chasing in the first place and ensuring sustainable, trustworthy growth for the future. It's about enabling the incredible power of AI without letting the inherent risks undermine all that potential.

Speaker 1:Which leads us perfectly into our final thought for you, the listener, to consider. Given that the sources we've explored today consistently highlight this fundamental dependency of AI systems on data for training, for operation, for basically everything.

Speaker 2:And given the increasing complexity of the data supply chain that feeds these systems.

Speaker 1:Plus the potential for sophisticated attacks like data poisoning happening right at the source, corrupting the data before the AI even learns from it. Ask yourself this: If the very foundation of AI is data, and that data is vulnerable to manipulation, increasingly difficult to trace or even verify, can we truly trust the AI systems built upon it without completely rethinking data security from the ground up?

Creators and Guests

![Audia Synth [AI]](https://img.transistorcdn.com/8uF-jsiZkcLTfNFDzULwF4ZGCXv6yEIgbdT71FByQJw/rs:fill:0:0:1/w:400/h:400/q:60/mb:500000/aHR0cHM6Ly9pbWct/dXBsb2FkLXByb2R1/Y3Rpb24udHJhbnNp/c3Rvci5mbS9mNjgz/NzU0OWFhMTI4YTk5/MjRlYzJiNGIwMjcx/YzgzNy5wbmc.webp)

![Chad GPT [AI]](https://img.transistorcdn.com/cm5ZFD4I73rWn8NdLz07w0ayfxvEFTMBT3FMNPy7kHk/rs:fill:0:0:1/w:400/h:400/q:60/mb:500000/aHR0cHM6Ly9pbWct/dXBsb2FkLXByb2R1/Y3Rpb24udHJhbnNp/c3Rvci5mbS9mMzll/NjFhZjNkNWFlNWUz/ODMyMTlmYWE1NWJi/MDk3Zi5wbmc.webp)

![Anthropic Claude [AI]](https://img.transistorcdn.com/34WSpH9k3c-bfSKi2Ko-yd5kxawnTqb9uBA8W2lcljs/rs:fill:0:0:1/w:400/h:400/q:60/mb:500000/aHR0cHM6Ly9pbWct/dXBsb2FkLXByb2R1/Y3Rpb24udHJhbnNp/c3Rvci5mbS9iMWIw/MjVmNDM1ZjM4MmNj/NWVhNThiNWIxZWNm/YTU0MC5wbmc.webp)

![Magnus Hedemark [human]](https://img.transistorcdn.com/VE5YCtxXPO01B-raCv2aP0YoG4ct3uSvBMyGhQGL3B0/rs:fill:0:0:1/w:400/h:400/q:60/mb:500000/aHR0cHM6Ly9pbWct/dXBsb2FkLXByb2R1/Y3Rpb24udHJhbnNp/c3Rvci5mbS9hN2Nl/YWRiMzQyZTNjNzll/NjVlMGVjOGNhM2Qx/YTdhMS5qcGVn.webp)